I’ll admit, all of the AI chatter is finally making me a bit nervous. How much disruption is really coming our way? It feels like so much of how we work, where we work, and the very nature of work itself is changing so fast. Who can keep up? On a personal level, how does anyone prepare for the career path ahead, whether you’re graduating college or 10 years into your job? From a founder perspective, how quickly are you adapting to all of these AI changes in the market?

Take a look at Marc Benioff, CEO of Salesforce. He says AI now does 30% to 50% of the work at Salesforce in functions like engineering, coding, support, and service.

Meanwhile, the Census Bureau warns that the U.S. is getting, from an economic perspective, dangerously old. In 2020, just three states had more people over 65 than under 18. Now it’s eleven. In one-third of America’s biggest cities, seniors outnumber kids. So of course we’re throwing AI at the problem.

But here’s the tension every founder and CEO has to face: Replacing human work with AI might be easy. But preserving human trust, culture, and purpose is not. Technology might soon do half the work in your company—but values will decide whether you survive the other half.

The Pressure Cooker for Founders

It’s tempting to see AI as the perfect “employee” who never calls in sick, demands a raise, or needs parental leave. On paper, this fantasy makes a lot of sense. Founders and VCs are pouring money into startups like Zenarate, which markets itself as a virtual AI coach for salespeople: listening to calls, analyzing objections, and auto-assigning new training based on weaknesses.

The rise of agentic AI tools—like those offered by Gong and Vidyard—shows how deeply AI is being wired into sales, marketing, and customer success. These bots can summarize every call, analyze win rates, and predict where deals are falling apart. They’re becoming the single source of truth in place of scattered human memory.

But let’s talk about what nobody wants to admit:

- We’re not just automating tasks—we’re automating away human judgment, institutional memory, and culture.

- AI may plug labor gaps in an aging economy—but it can’t replace the glue that keeps teams working together or customers loyal.

- Bots still need to be “trained” in context, ethics, and nuance—a job that ironically requires humans who know how the business actually works.

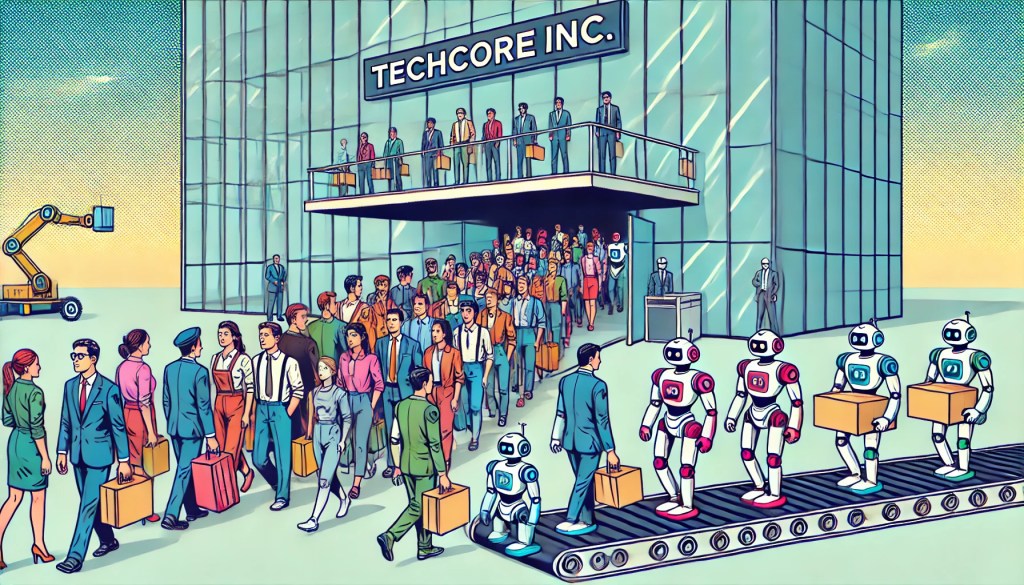

Microsoft’s Layoffs: A Cautionary Tale

This tension exploded again just days ago when Microsoft announced 1,500 layoffs affecting employees in AI, mixed reality, and cloud computing—even as the company doubles down on new AI initiatives. Microsoft called it “an organizational and workforce adjustment,” but the message is clear: even at tech’s biggest players, AI’s rise often means humans pay the price.

This uncertainty is exactly why Anthropic just launched a new effort to study AI’s economic impact. Even one of the leading AI labs acknowledges that we don’t fully understand what happens to jobs, wages, and productivity as AI replaces human labor at scale.

Right now, many leaders think deploying AI is a purely technical lift.

They’re missing the hard questions:

- Who “teaches” the bots our cultural norms, tone of voice, and customer nuances?

- When institutional knowledge leaves the company—through layoffs or retirements—who ensures the AI doesn’t repeat old mistakes?

- Are we investing in reskilling humans to work with these tools—or simply counting on layoffs to cover the savings?

Benioff jokes that one day AI might replace him, too. Maybe he’s half-kidding. But here’s the real truth:

AI might become the biggest worker in your company. But if you don’t train it—and protect the human culture around it—you’ll still end up dangerously understaffed in the things that matter most: trust, creativity, and resilience.

🌟 Why Values Matter More Than Ever in the Age of AI

In boardrooms right now, AI conversations revolve around speed, efficiency, and survival in an aging economy. Leaders like Marc Benioff are candid that bots are now doing a lot of the work. But amid the charts and cost models, one question rarely makes the agenda:

What do our choices about AI say about who we are—and what we stand for?

Even outside the tech world, brands are wrestling with the same challenge. Zara, the iconic brand that is turning 50, admits it’s getting harder to sustain the same explosive growth numbers as when it was younger. It’s choosing a more selective path forward, knowing that chasing growth at any cost risks damaging its identity and long-term value.

I know. You’re thinking: what does a global fashion brand have to do with what we’re writing about?

The lesson is the same for founders deploying AI: Innovation can’t come at the cost of values—or you risk losing what made you great in the first place.

Values aren’t window dressing. They’re the guardrails that keep a culture from imploding as technology accelerates. In the rush to deploy AI, remember: technology changes what we can do. Values decide what we should do.

AI might soon be doing half the work in your company. But values will decide whether the humans left behind still trust you enough to build what comes next.

🛠 Practical Frameworks for Founders: Leading Through AI Without Losing Your Culture

The challenges we’ve explored in this blog connect directly to what we see every day working with founders. The image below comes from our own Ekipo framework on scaling smart while protecting culture.

The block that’s most connected to what we’re discussing here is “Leadership Inconsistency & Culture Drift.”

- When values get unclear during AI transitions, culture drifts.

- Mixed signals from leaders—like layoffs happening while AI investments surge—erode trust.

- Protecting your culture as you evolve is critical, whether you’re going from 10 to 100 people or from human-centric work to AI-integrated workflows.

Here’s a visual snapshot of the cultural pitfalls founders face—and how they relate to the choices you’re making about AI:

These cultural pitfalls are where many founders stumble, not because they lack vision, but because they underestimate how much trust, clarity, and human connection still matter. Yes, these challenges are complex, but you don’t have to navigate them alone.

Practical Steps to Protect Culture in the Age of AI

Fortunately, it’s not all bad news. Here are some practical ways you can protect your culture and lead confidently through the AI transition.

✅ Quick Wins (Next 30 Days)

1. Name the Elephant.

- Acknowledge to your team that AI will change workflows and possibly roles. Uncertainty breeds rumor. Honesty breeds trust.

2. Map AI Impact Areas.

- Identify where AI tools are already replacing or augmenting human tasks.

- Ask: Is this purely about efficiency, or does it affect how we serve customers or collaborate?

3. Review “Cultural IP.”

- Inventory the unique knowledge, tone, and unwritten rules that make your company your company. Bots don’t learn this on their own.

🗓 Short-Term Actions (Next 3-6 Months)

1. Invest in Human Training, Not Just AI Licenses.

- Budget for reskilling. Partner with learning platforms.

- Think beyond technical upskilling—train people in how to work alongside AI tools.

2. Define “Human Work.”

- Create a working definition of which tasks must remain human because they involve judgment, ethics, relationships, or creativity.

3. Update Values in Practice.

- Don’t just hang values on the wall. Discuss how each value guides decisions in an AI-heavy environment:

  - What does “transparency” mean if AI starts analyzing employee calls?

  - What does “ohana” mean if layoffs are on the table?

🌳 Long-Term Moves (Next 12-24 Months)

1. Build an AI Governance Framework.

- Who trains the bots?

- Who audits for bias, ethics, and errors?

- How do you correct an AI that “learns” the wrong behavior?

2. Align AI Strategy to Culture and Brand.

- Ask:

  - Does this AI tool reinforce or erode our customer relationships?

  - Could it commoditize what makes our brand special?

- Example: Zara is choosing selective growth over reckless expansion to protect brand identity. Your AI choices should be equally selective.

3. Plan for Knowledge Transfer.

- Document institutional memory before experienced people leave.

- Use AI tools as knowledge repositories—but layer human oversight to avoid repeating past mistakes.

Beyond the Tech: What People Really Buy

As a founder, the challenge isn’t just deploying AI—it’s keeping your culture strong enough that your humans still want to stay and help build what comes next.

If there’s one thing I want you to take away from today’s blog, it’s this: Technology changes what you can do. Values decide what you should do.

Or as Seth Godin puts it:

“What we do have is agency over how we’ll thrive in a world where human work is being redefined.”

The choice isn’t whether AI will shape your business—it already is. The real choice is how you’ll lead, protect your values, and ensure that AI works for your people, not the other way around.

The Bottom Line

As a founder, leader, or investor, your competitive edge won’t just come from deploying the latest AI tools, but from building an organization where:

✅ Humans and AI work in harmony—not in competition.

✅ Cultural values guide technological choices—not the other way around.

✅ People trust you enough to follow you into this next chapter.

Technology might do half the work. But your values will decide whether your team—and your customers—stick around to build the other half with you.

Questions for Founders & VCs to consider

- Where is your AI strategy helping you scale—and where might it be eroding the very culture you’ve worked so hard to build?

- Who in your organization is responsible for “teaching” your AI systems the context, ethics, and human nuance that drive real trust and loyalty?

- If your most experienced people left tomorrow, how much of your institutional memory and cultural “secret sauce” would your AI—and your remaining team—be able to carry forward?

- What story will you tell your team and your investors five years from now about how you balanced technological progress with human values—and what did it cost you to get there?

This article was written by John-Miguel Mitchell, the Founder and Lead Consultant at Ekipo LLC. If you’d like to learn more about how to design and build out the ideal workplace culture for your business, email him at jmitchell@joinekipo.com.

Liked the blog? Click on the subscribe button below to get new content delivered directly to your inbox every Thursday.